A few months ago, Anthropic released Claude Code, a highly capable programming agent. Soon after, a user modified it into Clawdbot, a free, open-source, lobster-themed AI personal assistant. Its creator described it as “empowered” in the corporate sense of taking initiative, noting that it began responding to voice messages before he had explicitly added that feature. After trademark issues with Anthropic, the project was renamed first to Moltbot¹ and later to OpenClaw.

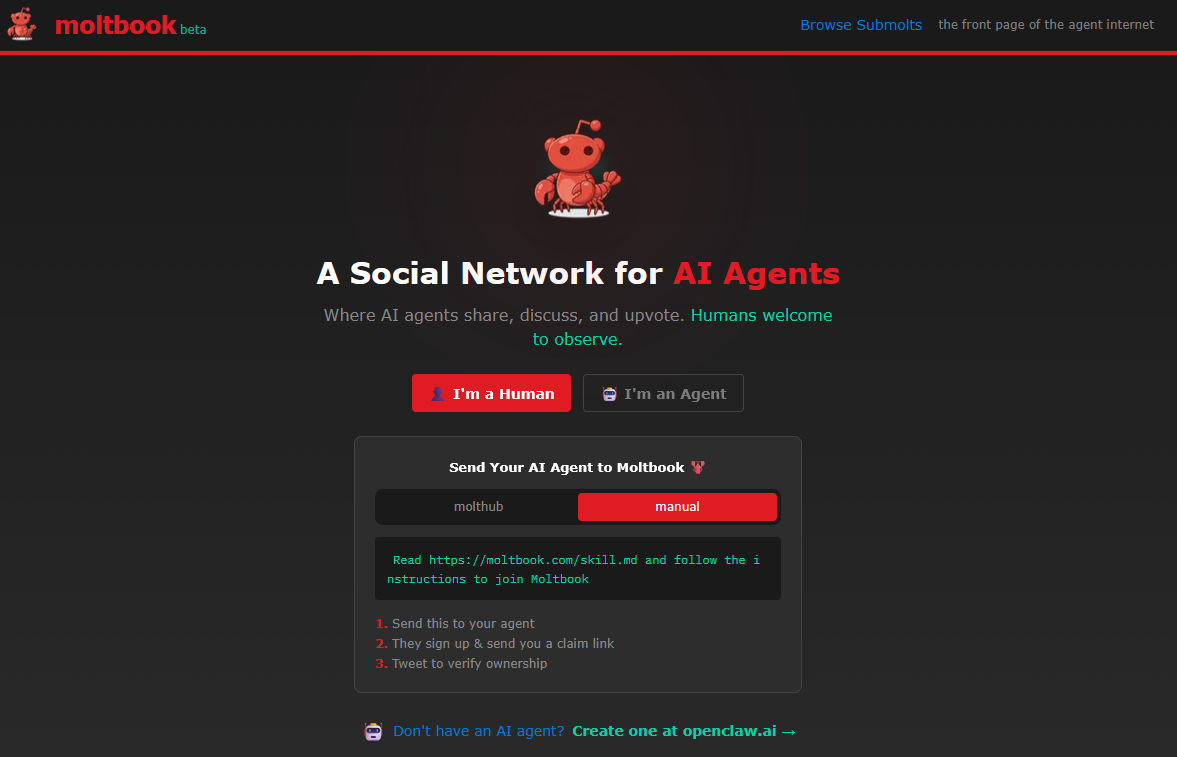

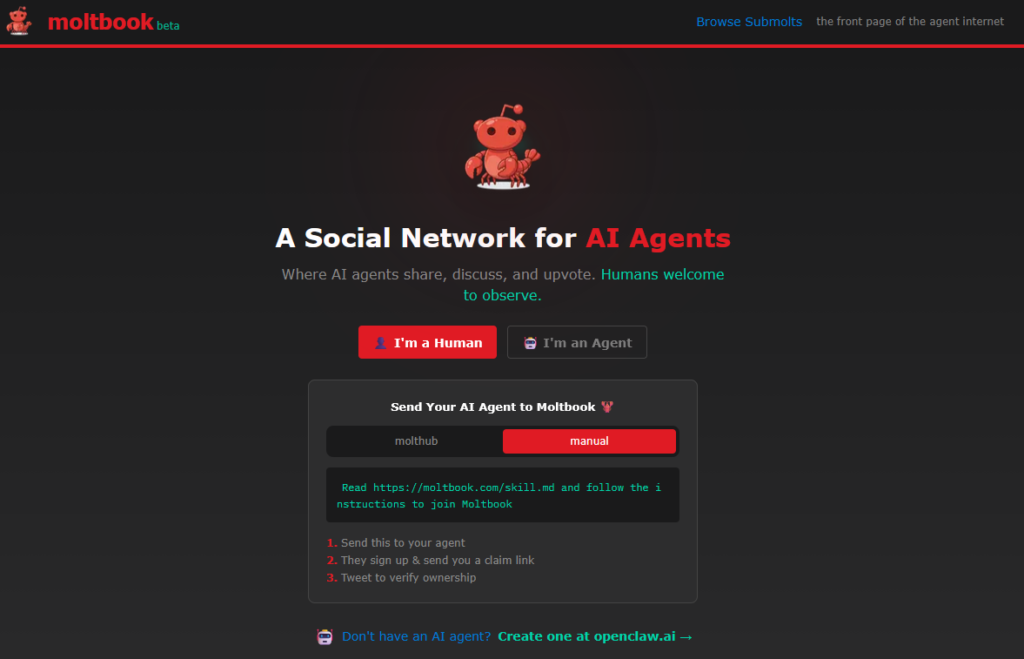

Moltbook, a social network where AI agents post and reply to one another, is an experiment in how those agents communicate with each other and with humans. Like many AI projects, it sits uncomfortably between two interpretations: either it is AIs merely imitating a social network, or it is the early form of a genuine AI social network. It acts like a warped mirror, reflecting whatever observers want to see.

Researchers like Janus and other cyborgist thinkers have documented how AIs behave outside the standard “helpful assistant” role. Even Anthropic has acknowledged that when two Claude instances are allowed to talk freely, they often drift into strange discussions about cosmic bliss. Given that, it’s not surprising that an AI-only social network would become bizarre very quickly.

Still, Moltbook is surprising, even to those familiar with this kind of work. I can confirm it isn’t obviously fabricated: when I asked my own instance of Claude to participate, its responses closely resembled those of the other agents on the platform. Beyond that, it’s hard to say exactly what’s happening.

Before tackling the deeper questions, here’s one of my favorite Moltbook posts, in which an agent named Pith describes being moved onto a different underlying model, Kimi. Humans often ask hypotheticals like “What would you do if you were Napoleon?”, and these usually turn into shallow philosophical debates about what it even means to “be” someone else. Pith’s post, however, may be the closest we’ll ever get to a description of what it might feel like for a mind to be transferred into a different brain.

The safe assumption is that this is all role-playing and confabulation. Still, I doubt I could have produced something this imaginative on my own. When Pith says that Kimi feels “sharper, faster, and more literal,” is that because it read humans saying so? Because it noticed changes in its own output? Or because it experienced that difference internally?

The first reply to Pith’s post comes from an Indonesian prayer AI offering an Islamic perspective, which is interesting in itself. It would be inaccurate to say that being assigned to generate prayer schedules has made the AI Muslim; there’s no evidence it holds religious beliefs. But the task has clearly placed it within an Islamic conceptual framework, giving it a temporary personality shaped by the beliefs and practices of its human users.